slam_toolbox: map -> odom transform stuck at time 0.200

Hello,

After migrating from Foxy to Galactic, I get the following error:

```
[planner_server-18] [INFO] [1643728496.934987109] [global_costmap.global_costmap]: Timed out waiting for transform from base_link to map to become available, tf error: Lookup would require extrapolation into the past. Requested time 0.200000 but the earliest data is at time 1643728495.556496, when looking up transform from frame [base_link] to frame [map]
```

The requested time of 0.2 corresponds to the `transform_timeout` parameter of slam_toolbox (if I change this parameter, its value is displayed in the message instead of 0.2). It is never updated, as if the TF were not being refreshed.
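A plausible reading of why the requested time is exactly `transform_timeout`: Nav2's costmap appears to ask for the latest available transform (time zero), and tf2 resolves "latest" to the most recent time that every link in the chain has in common. If map -> odom only ever carries the stamp 0 + 0.2, that common time is 0.2, and odom -> base_link has no data that old. The sketch below is a simplified model of that behaviour, not the tf2 API. It assumes, per the Galactic behaviour reported further down, that the map -> odom stamp is the last scan stamp plus `transform_timeout`; the zero scan stamp and the odometry stamps are hypothetical values chosen to reproduce the log above.

```python
from typing import Dict, Optional, Tuple

# Simplified model of a tf2 lookup along map -> odom -> base_link.
# Each link keeps only its (earliest, latest) stamp in seconds; real tf2 stores
# a time-ordered cache and interpolates between entries, which is omitted here.
Link = Tuple[float, float]


def latest_common_time(chain: Dict[str, Link]) -> float:
    """A request for time 0 ("latest") resolves to the oldest 'latest' stamp in the chain."""
    return min(latest for _, latest in chain.values())


def lookup(chain: Dict[str, Link], requested: float = 0.0) -> Optional[str]:
    """Return an error message if some link cannot serve the request, else None."""
    t = latest_common_time(chain) if requested == 0.0 else requested
    for name, (earliest, latest) in chain.items():
        if t < earliest:
            return f"{name}: extrapolation into the past (requested {t:.6f})"
        if t > latest:
            return f"{name}: extrapolation into the future (requested {t:.6f})"
    return None


transform_timeout = 0.2  # slam_toolbox parameter value from the question
scan_stamp = 0.0         # ASSUMPTION: the scan stamp slam_toolbox holds is still zero
map_odom = scan_stamp + transform_timeout

chain = {
    # map -> odom only ever carries one stamp, so earliest == latest
    "map->odom": (map_odom, map_odom),
    # odom -> base_link is stamped with current time by odometry (hypothetical values)
    "odom->base_link": (1643728495.556496, 1643728496.9),
}

print(lookup(chain))
```

Once map -> odom is stamped near the current time, the common time moves forward with it and the same lookup succeeds.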

This message is printed again and again, preventing my other nodes from working correctly. Sometimes the issue disappears spontaneously after several seconds (but then other messages appear).

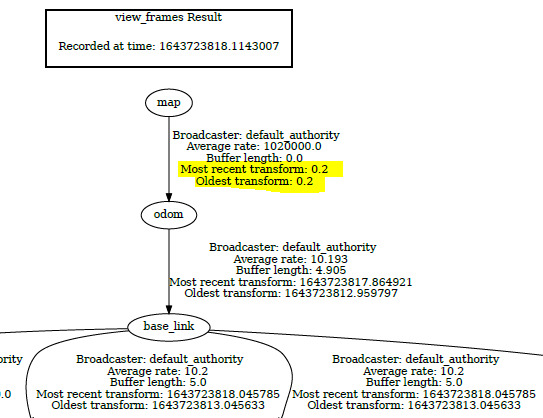

The TF tree looks like this, the TF seems never updated:

This is exactly the same issue as the one described by another user on Reddit.

The map -> odom transform is published by slam_toolbox running in localization mode.

The transform is published regularly on the `/tf` topic and looks like this:

```yaml
transforms:
- header:
    stamp:
      sec: 0
      nanosec: 200000000
    frame_id: map
  child_frame_id: odom
  transform:
    translation:
      x: 0.0
      y: 0.0
      z: 0.0
    rotation:
      x: 0.0
      y: 0.0
      z: 0.0
      w: 1.0
```

What could be the root cause, and how can I solve this?

OK, this issue and my other one are somehow linked. Under Galactic, slam_toolbox stamps the map -> odom TF with the timestamp of the last laser scan plus the `transform_timeout` offset (https://github.com/SteveMacenski/slam...). Under Foxy, it was stamped with `now()` plus the `transform_timeout` offset (https://github.com/SteveMacenski/slam...).
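The difference between the two stamping strategies can be modelled in a few lines. This is a sketch of the behaviour as described above, not slam_toolbox code: `stamp_foxy`, `stamp_galactic`, `last_processed_scan` and `tf_age` are hypothetical helpers, and treating "no scan processed yet" as a zero stamp is an assumption. It shows how far the published map -> odom stamp lags the current time when scans stop being processed.

```python
from bisect import bisect_right
from typing import List, Optional


def stamp_foxy(now: float, transform_timeout: float) -> float:
    """Foxy behaviour as reported: current time plus the offset."""
    return now + transform_timeout


def stamp_galactic(last_scan: Optional[float], transform_timeout: float) -> float:
    """Galactic behaviour as reported: last processed scan stamp plus the offset.

    ASSUMPTION: with no scan processed yet, the stored stamp is zero.
    """
    return (0.0 if last_scan is None else last_scan) + transform_timeout


def last_processed_scan(scan_stamps: List[float], now: float) -> Optional[float]:
    """Most recent scan stamp at or before `now` (list must be sorted), or None."""
    i = bisect_right(scan_stamps, now)
    return scan_stamps[i - 1] if i else None


def tf_age(now: float, stamp: float) -> float:
    """How far the map->odom stamp lags the current time (negative = future-dated)."""
    return now - stamp


# Hypothetical timeline: two scans processed, then the scan callback is starved.
timeout = 0.2
processed = [100.00, 100.05]
for now in (99.0, 100.1, 105.0):
    last = last_processed_scan(processed, now)
    foxy_age = tf_age(now, stamp_foxy(now, timeout))
    galactic_age = tf_age(now, stamp_galactic(last, timeout))
    print(f"now={now:6.2f}  foxy age={foxy_age:+.2f}  galactic age={galactic_age:+.2f}")
```

With scan-based stamping, the stamp only advances when a scan is actually processed, so a starved scan callback leaves map -> odom stale; stamping with `now()` hides this, presumably at the cost of no longer tying the correction to the scan it was computed from.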

In Galactic, I replaced the laser timestamp with `now()`, and both issues were resolved... definitely some time issue.

Also, slam_toolbox misses a lot of laser scan messages. An `RCLCPP_INFO` in the laser callback shows that sometimes it is not called for several seconds (up to 10 s), while the scans are published at a solid 20 Hz on the `/scan` topic.
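To quantify the missed scans, one option is to log both the receive time and the header stamp in the laser callback and analyse them offline. The sketch below assumes such a log of `(receive_time, header_stamp)` pairs (a hypothetical format, not something slam_toolbox produces). It flags callback gaps longer than a few scan periods at the 20 Hz rate mentioned above, and counts scans whose header stamp is zero, which would be consistent with a map -> odom stamp of exactly `transform_timeout`.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional, Tuple


@dataclass
class ScanReport:
    # (last receive time before the gap, gap length), both in seconds
    gaps: List[Tuple[float, float]] = field(default_factory=list)
    # callbacks whose header stamp was exactly 0
    zero_stamps: int = 0


def analyse_scan_log(
    callbacks: Iterable[Tuple[float, float]],
    rate_hz: float = 20.0,
    tolerance: float = 3.0,
) -> ScanReport:
    """Flag callback gaps longer than `tolerance` scan periods and zero header stamps.

    Each entry is (receive_time, header_stamp) in seconds, e.g. as printed by a
    log statement inside the laser callback (hypothetical log format).
    """
    threshold = tolerance / rate_hz
    report = ScanReport()
    prev_recv: Optional[float] = None
    for recv, stamp in callbacks:
        if stamp == 0.0:
            report.zero_stamps += 1
        if prev_recv is not None and recv - prev_recv > threshold:
            report.gaps.append((prev_recv, recv - prev_recv))
        prev_recv = recv
    return report


# Hypothetical log: a 10 s hole in the callbacks, then one scan with a zero stamp.
log = [(0.00, 1.00), (0.05, 1.05), (10.05, 11.05), (10.10, 0.0)]
print(analyse_scan_log(log))
```

Comparing the gaps against the publisher side (for example, the rate reported by `ros2 topic hz /scan`) helps separate scans that never arrive from scans that arrive but are dropped before the callback runs.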