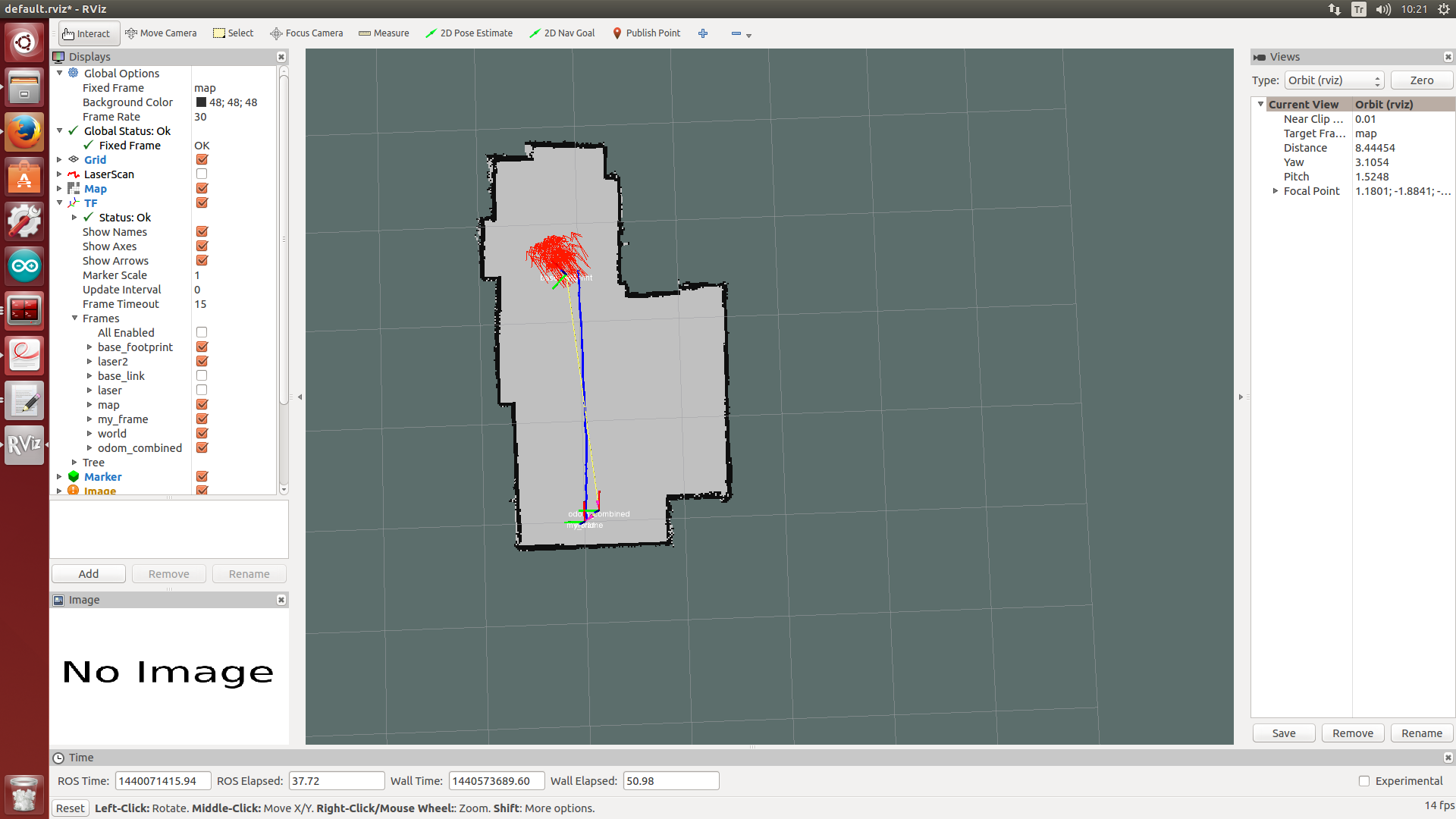

The new result, with its small particle spread, should be the better one.

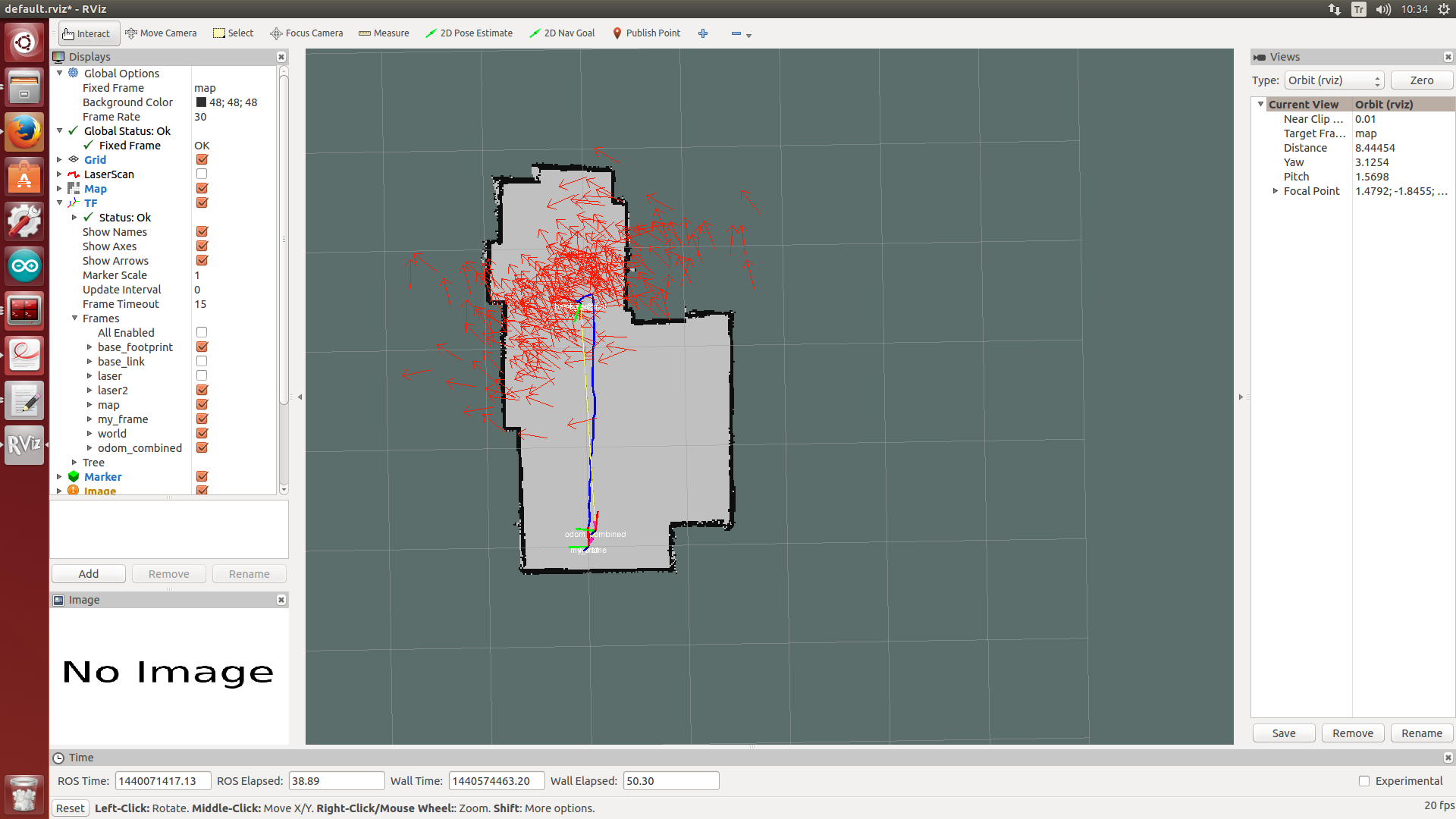

What is going on in the old image you showed is that the algorithm is not getting a good match between the laser data and the predicted state of your robot.

In simple words, what the filter does is "spread" particles around in an attempt to get a better match from one of these random poses.

When you are getting a good correlation between the sensed data and the estimated position, the "spread" of particles is smaller, because the algorithm has high confidence that the estimated pose is the correct one. Otherwise, it needs to "spread" its estimates farther.
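To make the idea concrete, here is a minimal 1D sketch of a single measurement update, not the actual code of your localization package. The robot measures its range to a wall, and every name and number here (`wall_x`, `true_x`, `sigma`, the particle count) is an assumption chosen for illustration. Particles that explain the reading well get high weights, so after resampling the set collapses around them and the spread drops.

```python
import math
import random
import statistics


def gaussian_likelihood(expected, measured, sigma):
    """Unnormalized likelihood of a range reading given the expected range."""
    return math.exp(-0.5 * ((measured - expected) / sigma) ** 2)


def measurement_update(particles, measured_range, wall_x, sigma, rng):
    """Weight each 1D particle by how well it explains the reading, then resample."""
    weights = [
        gaussian_likelihood(wall_x - p, measured_range, sigma) for p in particles
    ]
    if sum(weights) == 0.0:
        # No particle explains the reading at all; keep the set unchanged.
        return list(particles)
    # Draw a new set with probability proportional to the weights.
    return rng.choices(particles, weights=weights, k=len(particles))


rng = random.Random(0)
wall_x = 10.0   # hypothetical wall position
true_x = 4.0    # hypothetical true robot position

# Start with no idea where the robot is: particles spread over the corridor.
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]
spread_before = statistics.pstdev(particles)

# A noise-free reading for simplicity; a real laser reading would be noisy.
measured = wall_x - true_x
resampled = measurement_update(particles, measured, wall_x, sigma=0.2, rng=rng)
spread_after = statistics.pstdev(resampled)

print(f"spread before: {spread_before:.2f}, after: {spread_after:.2f}")
```

If the reading matched none of the particles well, the weights would be nearly flat (or nearly zero everywhere), and resampling would not concentrate the set in the same way.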

Check this explanation of Monte Carlo localization.

EDIT1:

I think you can say the new results are better, but you also have to take the filter's stability into account and test it in different scenarios. If you have access to the ground-truth position of your robot, you can also measure the error in your localization. In the end, a smaller "spread" of particles is an indication of good convergence, but the filter may have fallen into a local minimum, so you cannot use that criterion alone to assess the quality of your filter results.
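As a rough sketch of why both numbers matter, the snippet below computes the spread of a particle set and its error against a ground-truth position. The particle values and the ground truth are made up for illustration, and the helper names (`mean_position`, `position_spread`, `position_error`) are hypothetical, not part of any library. It shows a tightly converged set that is nevertheless far from the true position.

```python
import math


def mean_position(particles):
    """Average (x, y) of a list of (x, y) particles."""
    n = len(particles)
    return (sum(p[0] for p in particles) / n, sum(p[1] for p in particles) / n)


def position_spread(particles):
    """RMS distance of the particles from their own mean position."""
    mx, my = mean_position(particles)
    sq = [(x - mx) ** 2 + (y - my) ** 2 for x, y in particles]
    return math.sqrt(sum(sq) / len(sq))


def position_error(particles, ground_truth):
    """Distance between the mean particle position and the ground truth."""
    mx, my = mean_position(particles)
    return math.hypot(mx - ground_truth[0], my - ground_truth[1])


# Made-up example: a tight cluster that converged on the wrong place.
particles = [(5.0, 5.0), (5.1, 4.9), (4.9, 5.1), (5.0, 5.1), (5.0, 4.9)]
ground_truth = (1.0, 1.0)

spread = position_spread(particles)
error = position_error(particles, ground_truth)
print(f"spread: {spread:.3f} m, error: {error:.3f} m")
```

Here the spread is small while the error is several meters, which is the local-minimum situation described above. Logging both values over a run, in different environments, gives a fairer picture than the spread alone.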