How to combine two pose estimates (from cameras) ?

Hi,

Update: I have managed to fix this issue, my only query now is that whether I can simply average the euler angle readings from multiple sources ?

=========

I am currently in a situation where I have multiple cameras and I wish to combine their measurements into a common frame: the world frame.

In order to do this, I use an April Tag and find the camera's world poses at the start and then use it later on.

So I use 4x4 Homogenous transformation matrices (T) and calculate my world pose like this:

T_world_to_robot = T_world_to_camera x T_camera_to_robot

.

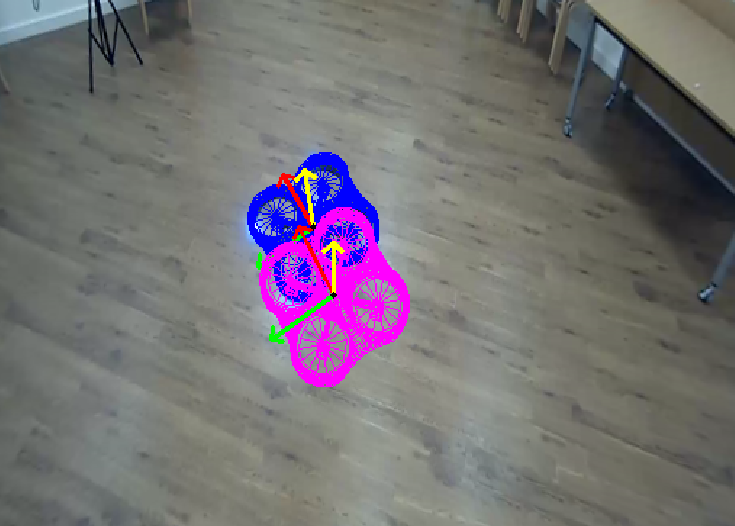

I am quite certain my T_camera_to_robot matrix is correct ( I visualise this by projecting a 3D mesh of the robot - please see image below), however I am getting poor results (x y and z coordinates are off by 50cm when the robot is close/far from the camera) for the world estimates from this equation. I average the world estimates obtained from each camera to produce the T_world_to_robot matrix.

Hence my question:

- Is this the right way to obtain the world pose of the robot when using multiple cameras ?

- Assuming someone provides the answer for my Q1, and I can get good world estimates, Can I just average (average the x,y,z coordinates and roll,pitch,yaw) the world estimates from each camera to obtain a combined result ?

The blue mesh is projected using T_camera_to_robot (and this seems to align perfectly)

The magenta mesh is the projected using T_world_to_robot