Why have different orientations and positions for same point clouds?

Hello

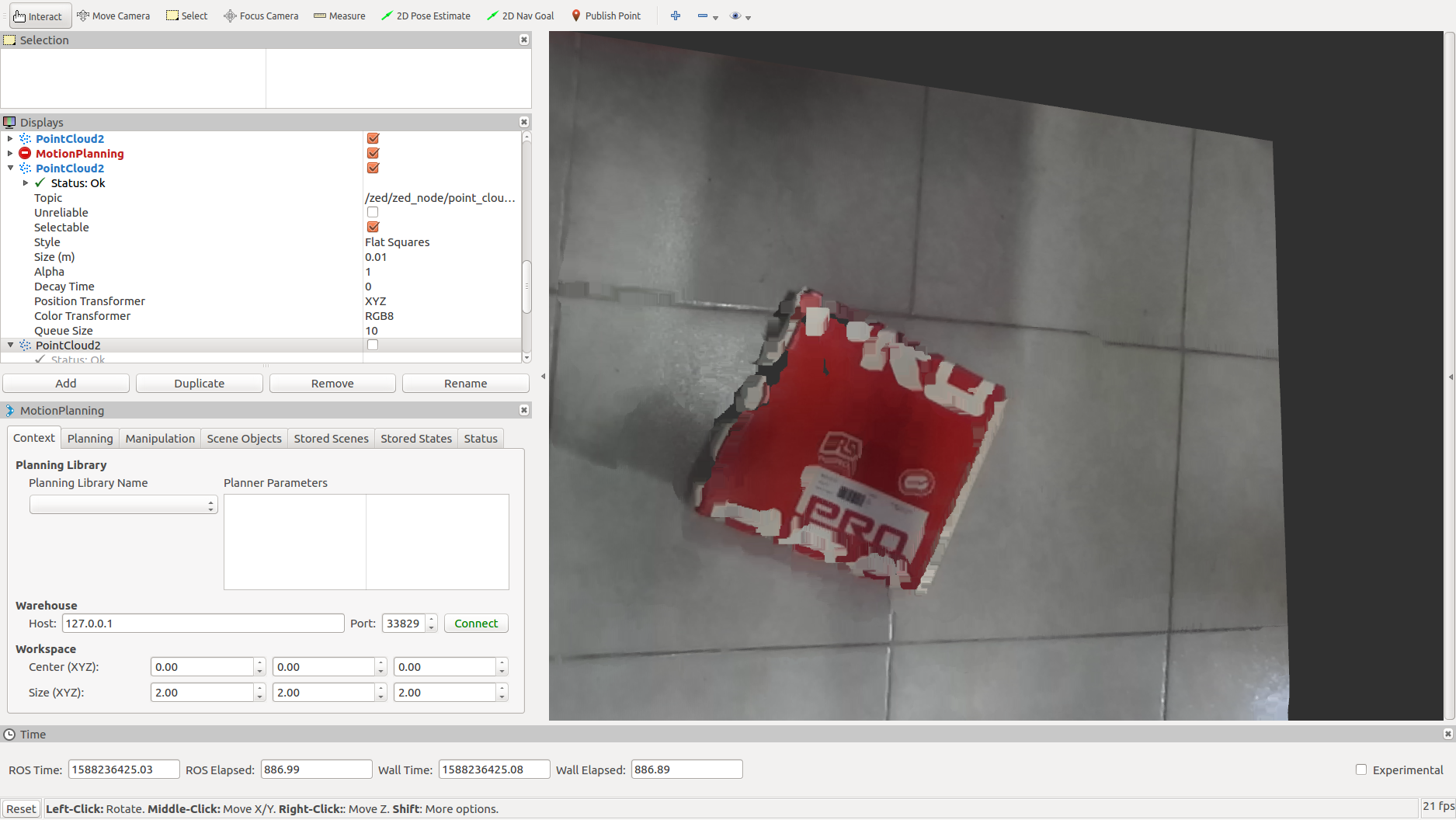

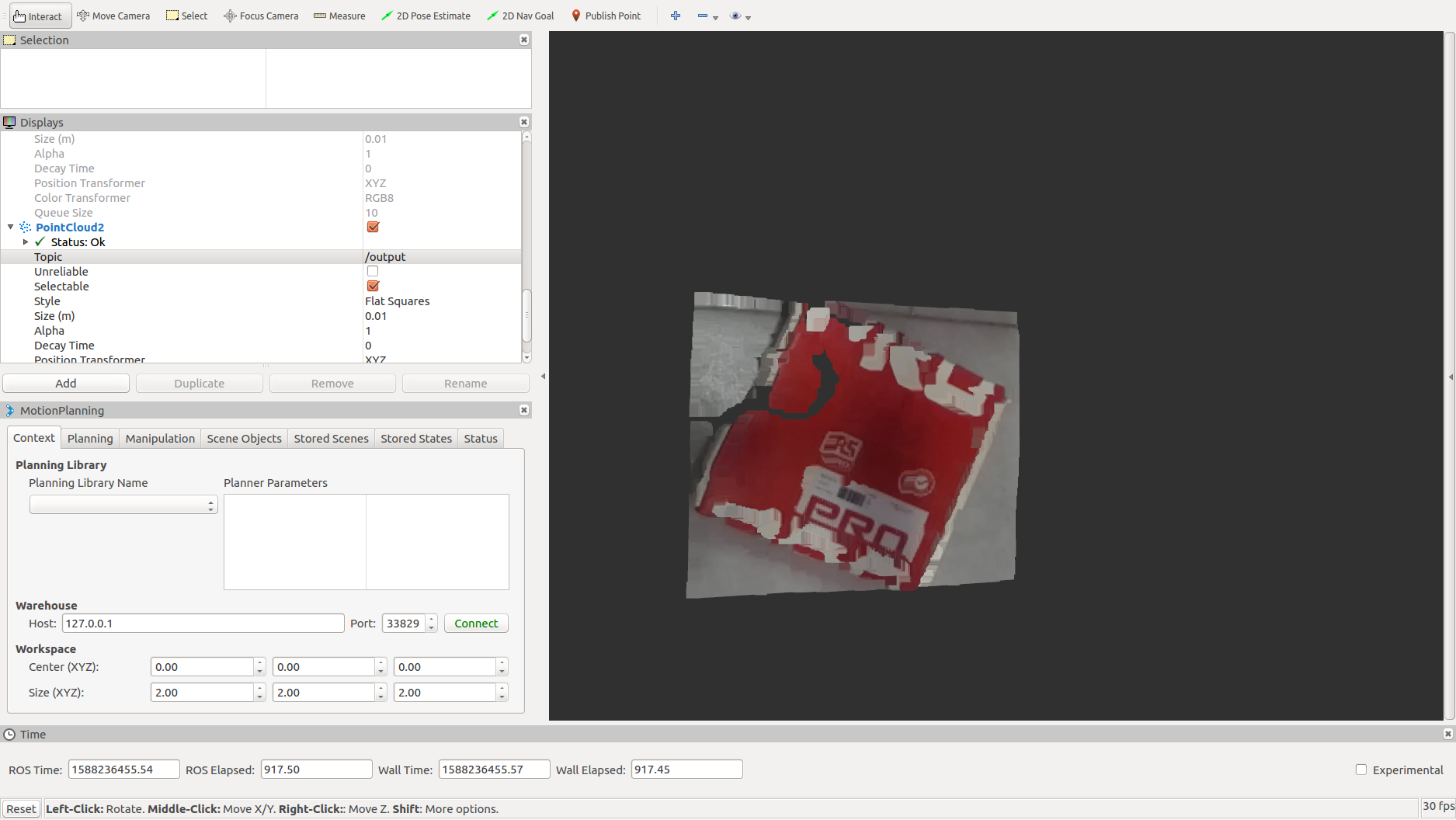

I would like to get the orientation and position od certain objects from point clouds. So, first I cropped the scene using the PCL CropBox filter like in PCL pcl::CropBox. Then used the PCL Moment of Inertia like in this PCL Tutorial link text. This is my code that compiles without errors. I include the whole point cloud scene and the cropped Box one pictures.

Here the C++ ROS code first

typedef pcl::PointXYZRGB PointC;

typedef pcl::PointCloud<pcl::PointXYZRGB> PointCloudC;

void cloud_cb (const sensor_msgs::PointCloud2& msg)

{

PointCloudC::Ptr cloud(new PointCloudC());

pcl::fromROSMsg(msg, *cloud);

//Crop Box

double min_x, min_y, min_z, max_x, max_y, max_z;

ros::param::param("crop_min_x", min_x, 0.6416);

ros::param::param("crop_min_y", min_y, -0.351);

ros::param::param("crop_min_z", min_z, -0.13453);

ros::param::param("crop_max_x", max_x, 0.97985);

ros::param::param("crop_max_y", max_y, -0.0371288);

ros::param::param("crop_max_z", max_z, 0.1379478);

Eigen::Vector4f min_pt(min_x, min_y, min_z, 1);

Eigen::Vector4f max_pt(max_x, max_y, max_z, 1);

pcl::CropBox<PointC> crop;

crop.setInputCloud(cloud);

crop.setMin(min_pt);

crop.setMax(max_pt);

PointCloudC::Ptr cropped_cloud(new PointCloudC());

crop.filter(*cropped_cloud);

sensor_msgs::PointCloud2 output;

pcl::toROSMsg(*cropped_cloud, output);

pub.publish(output);

//Moment of Inertia

pcl::MomentOfInertiaEstimation <pcl::PointXYZRGB> feature_extractor;

feature_extractor.setInputCloud (cropped_cloud);

feature_extractor.compute ();

std::vector <float> moment_of_inertia;

std::vector <float> eccentricity;

pcl::PointXYZRGB min_point_OBB;

pcl::PointXYZRGB max_point_OBB;

pcl::PointXYZRGB position_OBB;

Eigen::Matrix3f rotational_matrix_OBB;

float major_value, middle_value, minor_value;

Eigen::Vector3f major_vector, middle_vector, minor_vector;

Eigen::Vector3f mass_center;

feature_extractor.getMomentOfInertia (moment_of_inertia);

feature_extractor.getEccentricity (eccentricity);

feature_extractor.getOBB (min_point_OBB, max_point_OBB, position_OBB, rotational_matrix_OBB);

feature_extractor.getEigenValues (major_value, middle_value, minor_value);

feature_extractor.getEigenVectors (major_vector, middle_vector, minor_vector);

feature_extractor.getMassCenter (mass_center);

Eigen::Vector3f position (position_OBB.x, position_OBB.y, position_OBB.z);

Eigen::Quaternionf quat (rotational_matrix_OBB);

cout << " orientation x = " << quat.x() << endl;

cout << " orientation y = " << quat.y() << endl;

cout << " orientation z = " << quat.z() << endl;

cout << " orientation w = " << quat.w() << endl;

cout << " postion x = " << position_OBB.x << endl;

cout << " postion y = " << position_OBB.y << endl;

cout << " postion z = " << position_OBB.z << endl;

}

int main(int argc, char ** argv)

{

ros::init(argc, argv, "test_pcl_ros");

ros::NodeHandle nh;

ros::Subscriber sub = nh.subscribe("/zed/zed_node/point_cloud/cloud_registered", 10, cloud_cb);

pub = nh.advertise<sensor_msgs::PointCloud2> ("output", 10);

ros::spin();

}

Here the point cloud images.

First the whole scene.  Then the cropped Box.

Then the cropped Box.  Here the output:

Here the output:

orientation x = -0.381498

orientation y = 0.378691

orientation z = 0.746498

orientation w = -0.392165

postion x = 0.73152

postion y = -0.175997

postion z = 0.00919689

orientation x = -0.385631

orientation y = 0.426972

orientation z = 0.682854

orientation w = -0.450215

postion x = 0.74303

postion y = -0.180681

postion z = 0.000638498

orientation x = -0.370232

orientation y = 0.333529

orientation z = 0.798585

orientation w = -0.337563

postion x = 0.730808

postion y = -0.171258

postion z = 0.0171869

orientation x = -0.377332

orientation y = -0.371774

orientation z = 0.397294

orientation w = 0.749374

Basically the orientation w change. But why?

Or should I do first planar ...

Those quaternions look like they are very close to 180 degrees away from each other. Moreover, your object seems to have quite a bit of symmetry. To me it seems quite possible that two of the eigenvalues and corresponding eigenvectors could have switched places.