Localization problem with gmapping and RPLidar

Hello ROS community,

I'm working on a project whose goal is to create an autonomous mobile robot running a SLAM algorithm using gmapping. I'm using odometry from the motor encoders and laser scan data from an RPLidar. ROS Hydro is running on a UDOO board.

I completed the navigation stack setup and, as long as the robot is not moving, everything seems to work fine (transformations published, map published, laser scan data published). When I move the robot using teleop, the map->odom transformation broadcast by gmapping seems to make no sense. A video of RViz shows this better than words.

Apart from the localization problem, I cannot understand why the odom and base_link frames do not overlap after start. Shouldn't they?

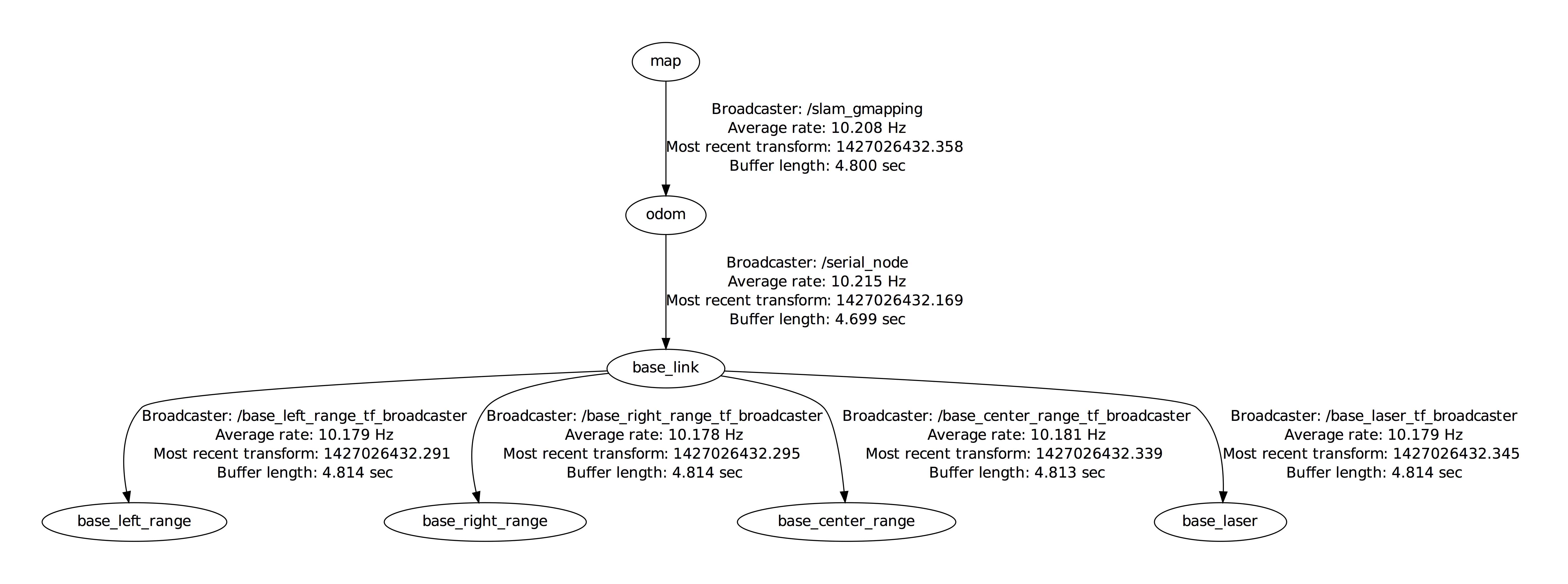

Here is the transformation tree:

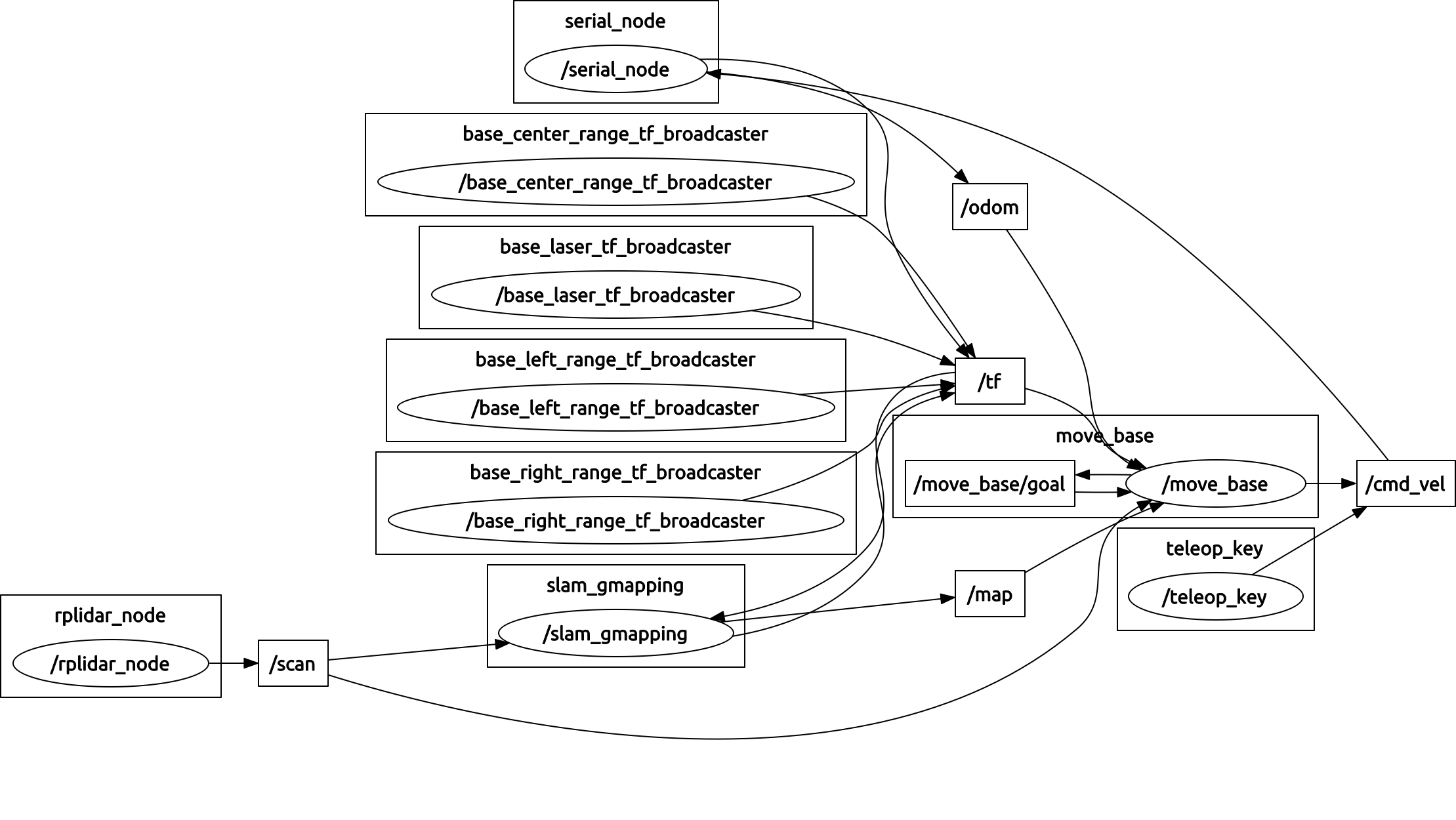

Here are the nodes and topics:

This is the gmapping node configuration:

throttle_scans: 1

base_frame: base_link

map_frame: map

odom_frame: odom

map_update_interval: 10

maxUrange: 5.5

maxRange: 6

sigma: 0.05

kernelSize: 1

lstep: 0.05

astep: 0.05

iterations: 5

lsigma: 0.075

ogain: 3.0

lskip: 0

minimumScore: 0.0

srr: 0.1

srt: 0.2

str: 0.1

stt: 0.2

linearUpdate: 1.0

angularUpdate: 0.5

temporalUpdate: -1.0

resampleThreshold: 0.5

particles: 30

xmin: -10

xmax: 10

ymin: -10

ymax: 10

delta: 0.05

llsamplerange: 0.01

llsamplestep: 0.01

asamplerange: 0.005

lasamplestep: 0.005

transform_publish_period: 0.1

occ_thresh: 0.25
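One quick sanity check on a parameter list like this is to compare the names against those documented for slam_gmapping, since a misspelled private parameter is not reported: the node simply uses its default for the name it actually reads. Below is a minimal sketch, assuming the parameter names listed on the gmapping wiki page. The `KNOWN_PARAMS` set is an assumption that may not match every version, and `unknown_params` is a hypothetical helper, not part of any ROS package.

```python
# Parameter names documented for slam_gmapping.
# Assumption: taken from the gmapping wiki page; verify against your version.
KNOWN_PARAMS = {
    "inverted_laser", "throttle_scans", "base_frame", "map_frame",
    "odom_frame", "map_update_interval", "maxUrange", "maxRange",
    "sigma", "kernelSize", "lstep", "astep", "iterations", "lsigma",
    "ogain", "lskip", "minimumScore", "srr", "srt", "str", "stt",
    "linearUpdate", "angularUpdate", "temporalUpdate",
    "resampleThreshold", "particles", "xmin", "ymin", "xmax", "ymax",
    "delta", "llsamplerange", "llsamplestep", "lasamplerange",
    "lasamplestep", "transform_publish_period", "occ_thresh",
}

# Names copied from the configuration posted above.
CONFIG_NAMES = [
    "throttle_scans", "base_frame", "map_frame", "odom_frame",
    "map_update_interval", "maxUrange", "maxRange", "sigma",
    "kernelSize", "lstep", "astep", "iterations", "lsigma", "ogain",
    "lskip", "minimumScore", "srr", "srt", "str", "stt",
    "linearUpdate", "angularUpdate", "temporalUpdate",
    "resampleThreshold", "particles", "xmin", "xmax", "ymin", "ymax",
    "delta", "llsamplerange", "llsamplestep", "asamplerange",
    "lasamplestep", "transform_publish_period", "occ_thresh",
]


def unknown_params(names, known=KNOWN_PARAMS):
    """Return the names that are not in the documented set, in input order."""
    return [name for name in names if name not in known]


if __name__ == "__main__":
    print(unknown_params(CONFIG_NAMES))
```

With the names above this flags `asamplerange`; the documented name is `lasamplerange`. It is worth correcting, although on its own it probably does not explain the behaviour described here.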

I would really appreciate any suggestion to fix my problem. I did not post the other configurations since the problem seems to be related to gmapping; if more information is needed I will be happy to provide it.

Many thanks! Ale

UPDATE

As suggested by paulbovbel, I followed the guide to test odometry quality. The result is quite good for straight paths, a little less so for rotation.

Watching the video, I do not think the problem is in the odometry. For the first seconds after the robot starts moving (until 0:08) everything seems fine. During this time the position is updated from odometry only (at least, I guess so) and the laser scan data (in red) matches the map, which suggests the odometry data is quite good. After 0:08 the map->odom transformation (broadcast by gmapping) changes, in theory to compensate for odometry drift, but in the end it spoils the estimated position of the robot. Once the position estimate is spoiled, the laser scan data no longer makes sense either, and as a result the built map is nonsense too. Does this make sense, or am I making a mistake in my reasoning?
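For reference, with `linearUpdate: 1.0` and `angularUpdate: 0.5` in the configuration above, gmapping processes a new scan only after the robot has translated about 1 m or rotated about 0.5 rad since the last processed scan, and `temporalUpdate: -1.0` turns time-based updates off. That would be consistent with the first seconds being driven by odometry alone. Here is a minimal sketch of that gating idea; `should_process_scan` is a hypothetical helper written for illustration, not gmapping's actual code.

```python
import math


def should_process_scan(last_pose, pose, linear_update=1.0, angular_update=0.5):
    """Decide whether enough motion has accumulated to process a new scan.

    Poses are (x, y, theta) tuples in the odom frame, theta in radians.
    Returns True when the translation or the rotation since the last
    processed scan reaches its threshold.
    """
    dx = pose[0] - last_pose[0]
    dy = pose[1] - last_pose[1]
    # Wrap the heading difference into [-pi, pi] before comparing.
    raw = pose[2] - last_pose[2]
    dtheta = math.atan2(math.sin(raw), math.cos(raw))
    return math.hypot(dx, dy) >= linear_update or abs(dtheta) >= angular_update


if __name__ == "__main__":
    start = (0.0, 0.0, 0.0)
    print(should_process_scan(start, (0.5, 0.0, 0.0)))  # half a metre travelled
    print(should_process_scan(start, (1.2, 0.0, 0.0)))  # more than one metre
```

Under this reading, the first map->odom correction can only appear once one of the two thresholds has been crossed.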

Just to give more info: the video shows the robot just a minute after the system starts. When the video starts the robot is stopped in its initial position; for this reason I expect the base_link, odom and map frames to overlap (drift should be zero and the robot is at the center of the map).
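This expectation can be written as a 2D transform composition: the robot pose in the map frame is map->odom composed with odom->base_link, so the three frames coincide only when both transforms are identity. Below is a minimal sketch using plain `(x, y, theta)` tuples; `compose` is a hypothetical helper for illustration, not a tf API.

```python
import math


def compose(a, b):
    """Compose two 2D rigid transforms given as (x, y, theta).

    If a is parent->child and b is child->grandchild, the result is
    parent->grandchild.
    """
    ax, ay, at = a
    bx, by, bt = b
    x = ax + math.cos(at) * bx - math.sin(at) * by
    y = ay + math.sin(at) * bx + math.cos(at) * by
    # Keep the resulting heading wrapped into [-pi, pi].
    theta = math.atan2(math.sin(at + bt), math.cos(at + bt))
    return (x, y, theta)


if __name__ == "__main__":
    # At start-up I expect both transforms to be identity.
    map_to_odom = (0.0, 0.0, 0.0)
    odom_to_base = (0.0, 0.0, 0.0)
    print(compose(map_to_odom, odom_to_base))

    # A correction from gmapping (values made up for illustration)
    # moves base_link in the map without changing the odometry.
    map_to_odom = (1.0, 0.0, math.pi / 2)
    odom_to_base = (1.0, 0.0, 0.0)
    print(compose(map_to_odom, odom_to_base))
```

If odom and base_link are already apart at start-up, then odom->base_link is not identity, which means the odometry publisher is already reporting a nonzero pose at that moment.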

UPDATE

I'm still working to fix this problem. I performed some tests to check the quality of my odometry. On the attached image from RViz you can ...