Pointers towards using robot_localization for VO + Wheel odometry

Hey , i am trying to setup a EKF fusion for combining odometry coming from two topics

- Visual Odometry (X , Y and yaw)

- Wheel Encoders ( X , Y and yaw)

The main source of Odometry is coming from wheel encoders , the visual odometry is there much like a correction mechanism like while using IMUs. Having said that , my VO node publishes Twist values , is it better to fuse the wheel odometry using Twist values using robot_localization ?

I am getting the following error when i launch rviz to view the odometry

Assertion `!pos.isNaN() && "Invalid vector supplied as parameter"' failed.

I am confused with how the TF should be setup . The TF of wheel encoder odometry is as follows

frame_id: "odom"

child_frame_id: "base_link"

TF of the visual Odometry is as follows

frame_id: "raspicam"

child_frame_id: ''

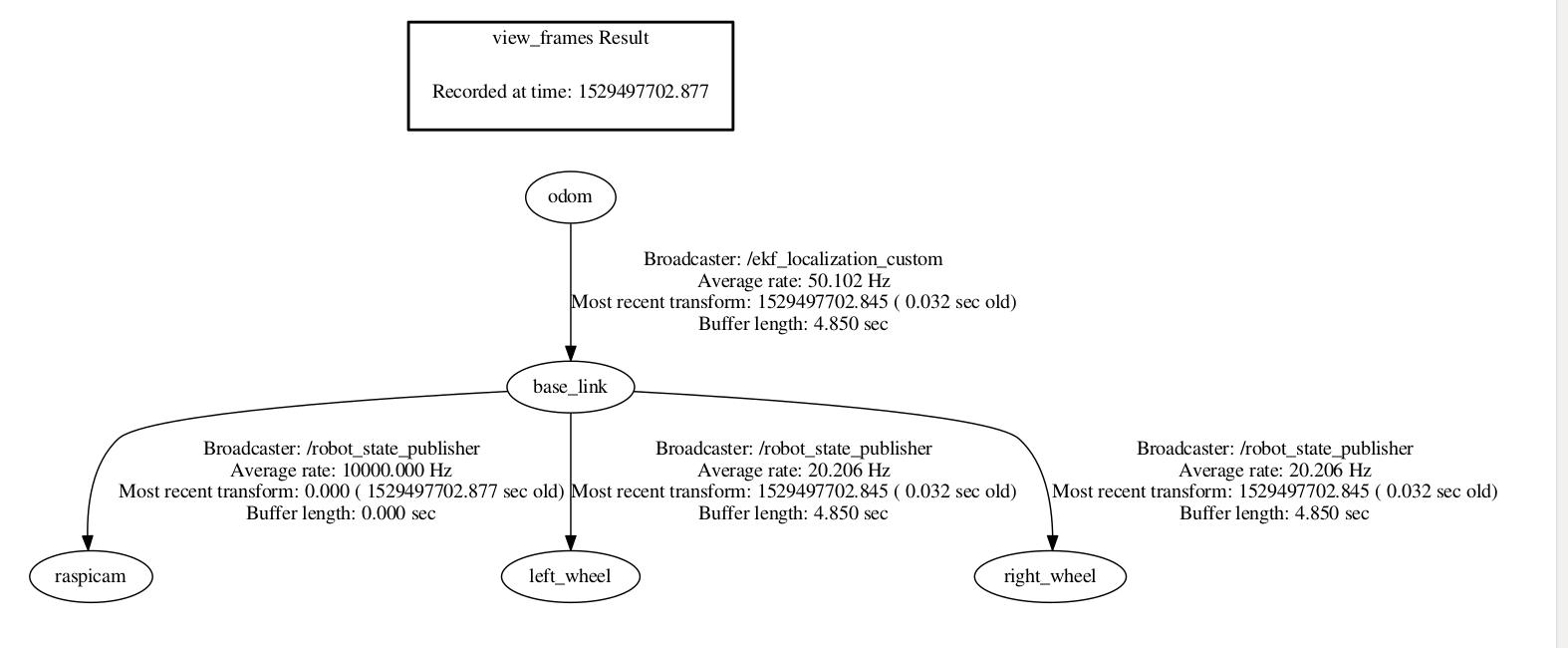

AFTER LAUNCHING THE EKF NODE

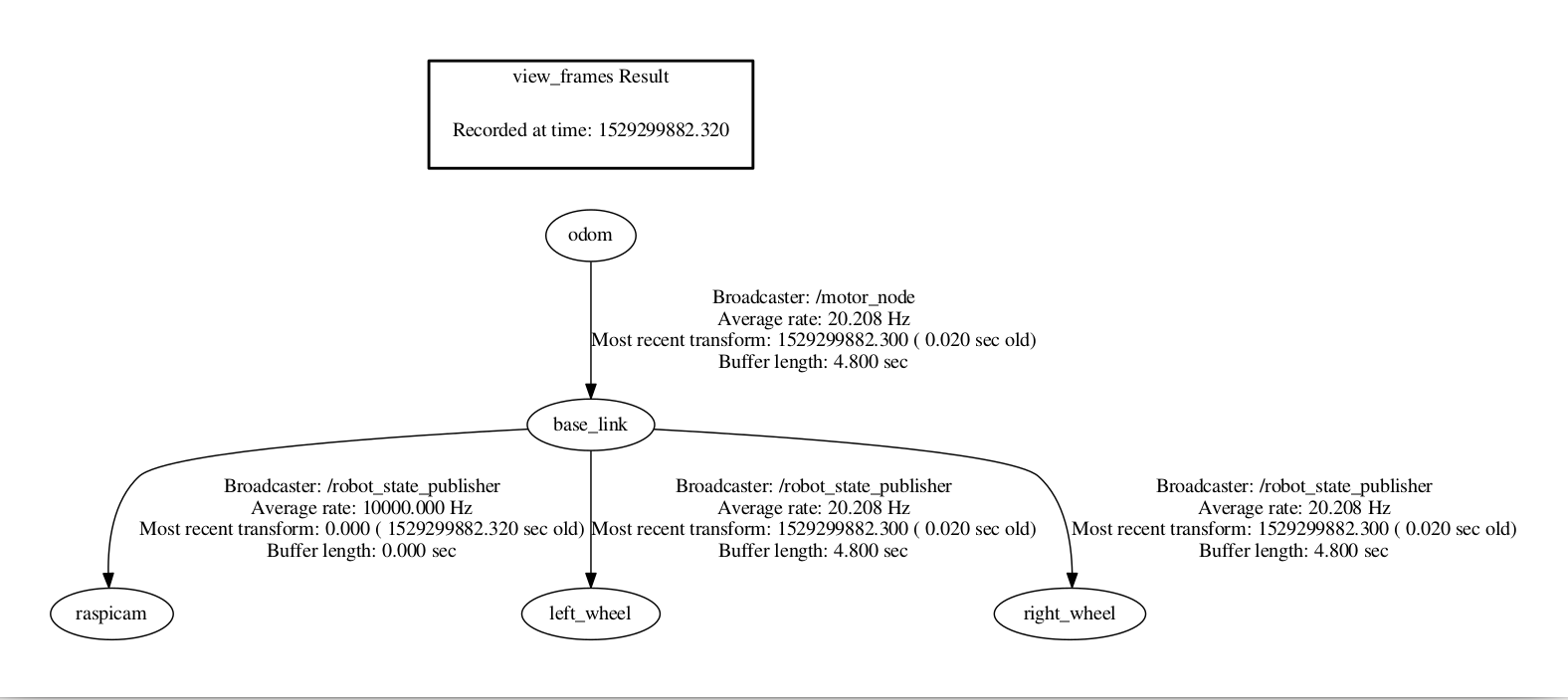

BEFORE LAUNCHING THE EKF NODE

* MY EKF LAUNCH FILE LOOKS LIKE THIS *

<node pkg="robot_localization" type="ekf_localization_node" name="ekf_localization_custom" clear_params="true"> <!-- renamed to ekf_localization_custom to prevent name conflicts -->

<param name="frequency" value="30"/> <!-- this value should be fine -->

<param name="sensor_timeout" value="0.1"/> <!-- this value should be fine -->

<param name="two_d_mode" value="false"/> <!-- could make this true to stick to XY plane.. -->

<!-- based these values off of the ROScon presentation -->

<param name="map_frame" value="map"/>

<param name="odom_frame" value="odom"/>

<param name="base_link_frame" value="base_link"/>

<param name="world_frame" value="odom"/>

<param name="odom0" value="/optical_flow"/>

<param name="odom1" value="/ubiquity_velocity_controller/odom"/>

<!-- settings for using Twist -->

<rosparam param="odom0_config">[false, false, false,

false, false, false,

true, true, false,

false, false, true,

false, false, false]</rosparam>

<rosparam param="odom1_config">[false, false, false,

false, false, true,

true, true, false,

false, false, true,

false, false, false]</rosparam>

<param name="odom0_differential" value="false"/>

<param name="odom1_differential" value="false"/>

<param name="publish_null_when_lost" value="false"/>

<param name="odom0_relative" value="false"/>

<param name="odom1_relative" value="false"/>

<param name="odom0_queue_size" value="10"/>

<remap from="/odometry/filtered" to="/odometry/visual" />

</node>

[EDIT #1 adding sensor data samples ]

Wheel Odometry

header:

seq: 3786

stamp:

secs: 1529497452

nsecs: 845127379

frame_id: "odom"

child_frame_id: "base_link"

pose:

pose:

position:

x: 0.50

y: 0.20

z: 0.0

orientation:

x: 0.0

y: 0.0

z: 0.01

w: 1.0

covariance: [0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2]

twist:

twist:

linear:

x: 0.8334

y: 0.0

z: 0.0

angular:

x: 0.0

y: 0.0

z: 0.1233

covariance: [0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0 ...

Please post sample messages for every sensor input.

Hey , I have edited the question and added the sample data . I think I have resolved the issue of rviz crashing , it was because the TF not set properly .

Now ,The covariance on the fussed output keeps building up into a larger and larger value. How do I systematically tackle this ?