robot_localization - angular drift when fusing IMU

I've run into an interesting issue. It seems that when I'm fusing angular velocity it ends up adding a significant angular drift to odometry output.

My robot platform is a differential_drive robot with a caster wheel. On my robot I have two IMUs: one in the front (Realsense Tracking camera) and another one in the back (Phidgets Spatial IMU).

Here is my starting robot_localization config:

odom_frame: odom

base_link_frame: base_link

world_frame: odom

publish_tf: true

frequency: 25

two_d_mode: true

odom0: /rr_robot/mobile_base_controller/odom

odom0_config: [false, false, false,

false, false, false,

true, true, false,

false, false, false,

false, false, false]

odom0_differential: false

# imu0: /rs_t265/imu

imu0: /imu/data_raw

imu0_config: [false, false, false,

false, false, false,

false, false, false,

true, true, true,

false, false, false]

imu0_differential: false

imu0_relative: true

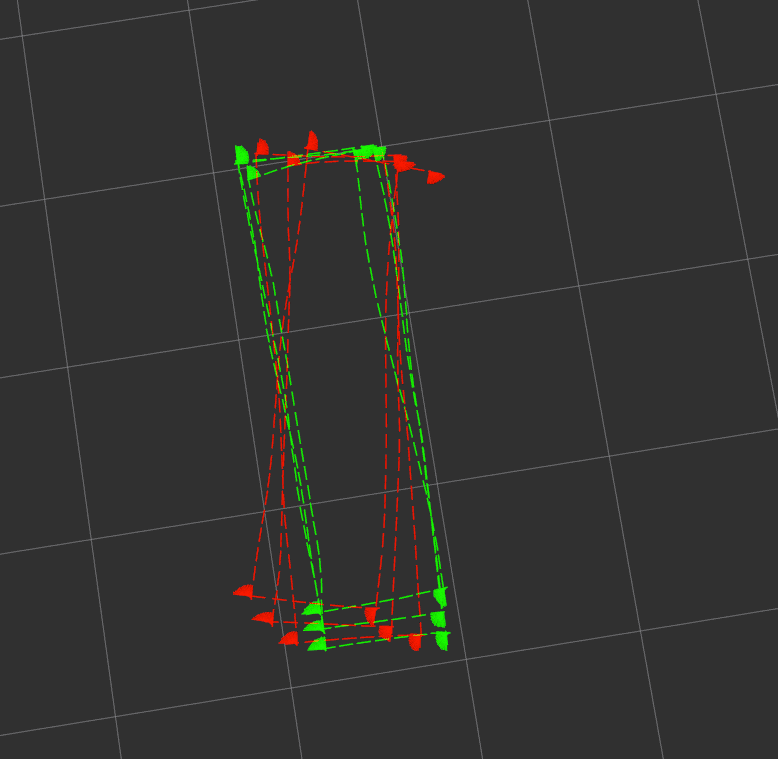

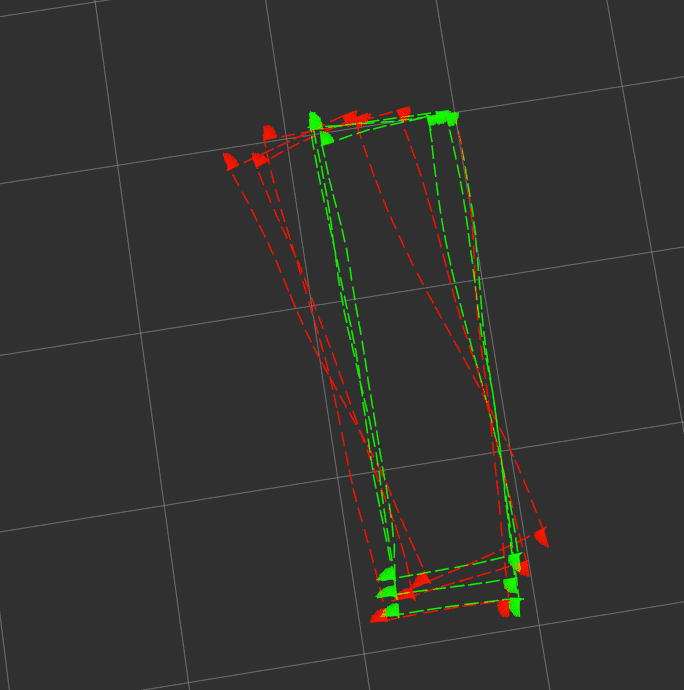

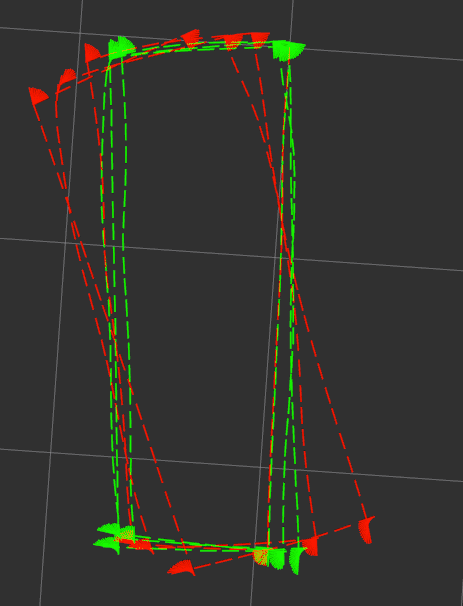

Here is the output for the /rs_t265/imu topic (mounted in front):

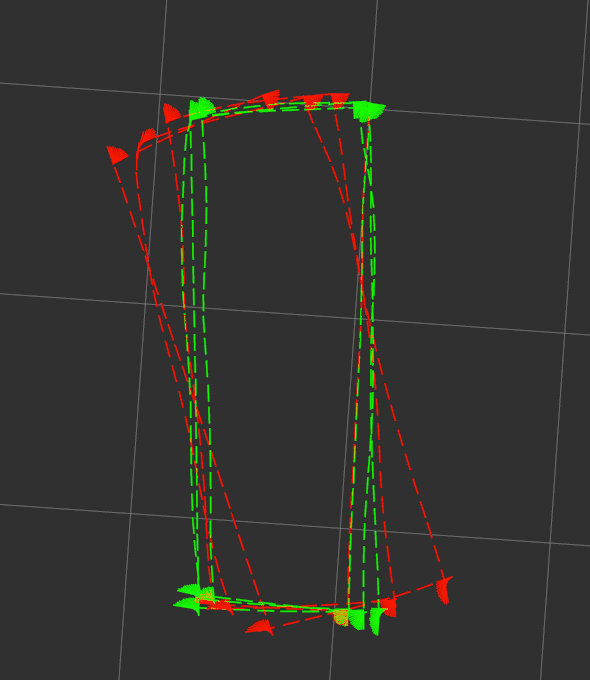

and here is the output when using Phidgets (mounted in the back of the robot):

The green odometry in the picture is the wheel odometry that is very close to the actual path traveled by the robot. The odometry marked in red is the output of robot_localization package.

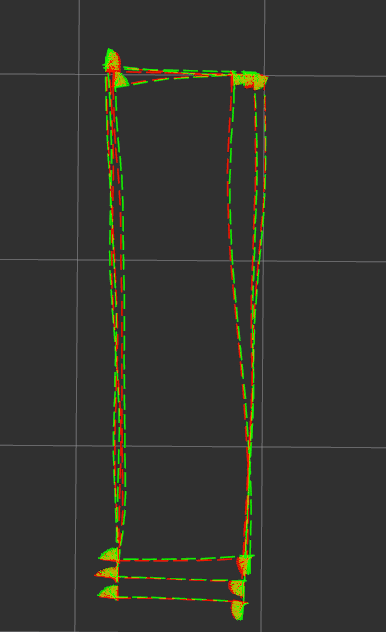

Almost all sources I've seen on the internet suggest fusing x and y velocity from the odometry topic and angular velocities of the IMU (magnetometer doesn't work well enough for me due to magnetic interference). The only way I can get the fused odometry appear much closer to the wheel odometry is by fusing the yaw of odom0 message, however it seems to make the filter not take the imu into account very much:

Am I'm missing something in my setup? I think I read up all the information on robot_localization that's available on the internet and didn't come to any obvious conclusions. I'd appreciate any feedback you might have!

EDIT: Some additional information: For each of the IMU setups the sensor frame seems to be correctly specified in the TF tree and is correctly contained in the appropriate IMU message fields.

EDIT2: Output when using madgwick filter to produce an IMU message with orientation field and fusing the resultant yaw looks as follows:

Unfortunately in 3 of the 4 corners I turn at there are huge metallic object affecting the magnetometer.

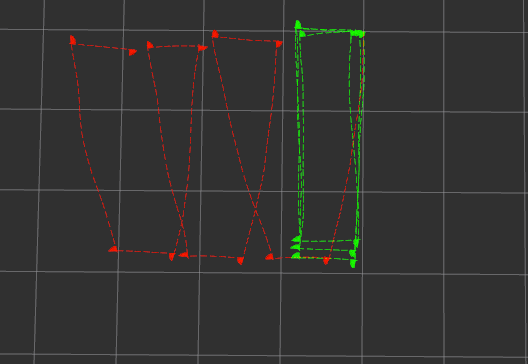

EDIT3: Trying to fuse yaw rate from wheel odom.

Before yaw rate fusion:

After fusing yaw rate:

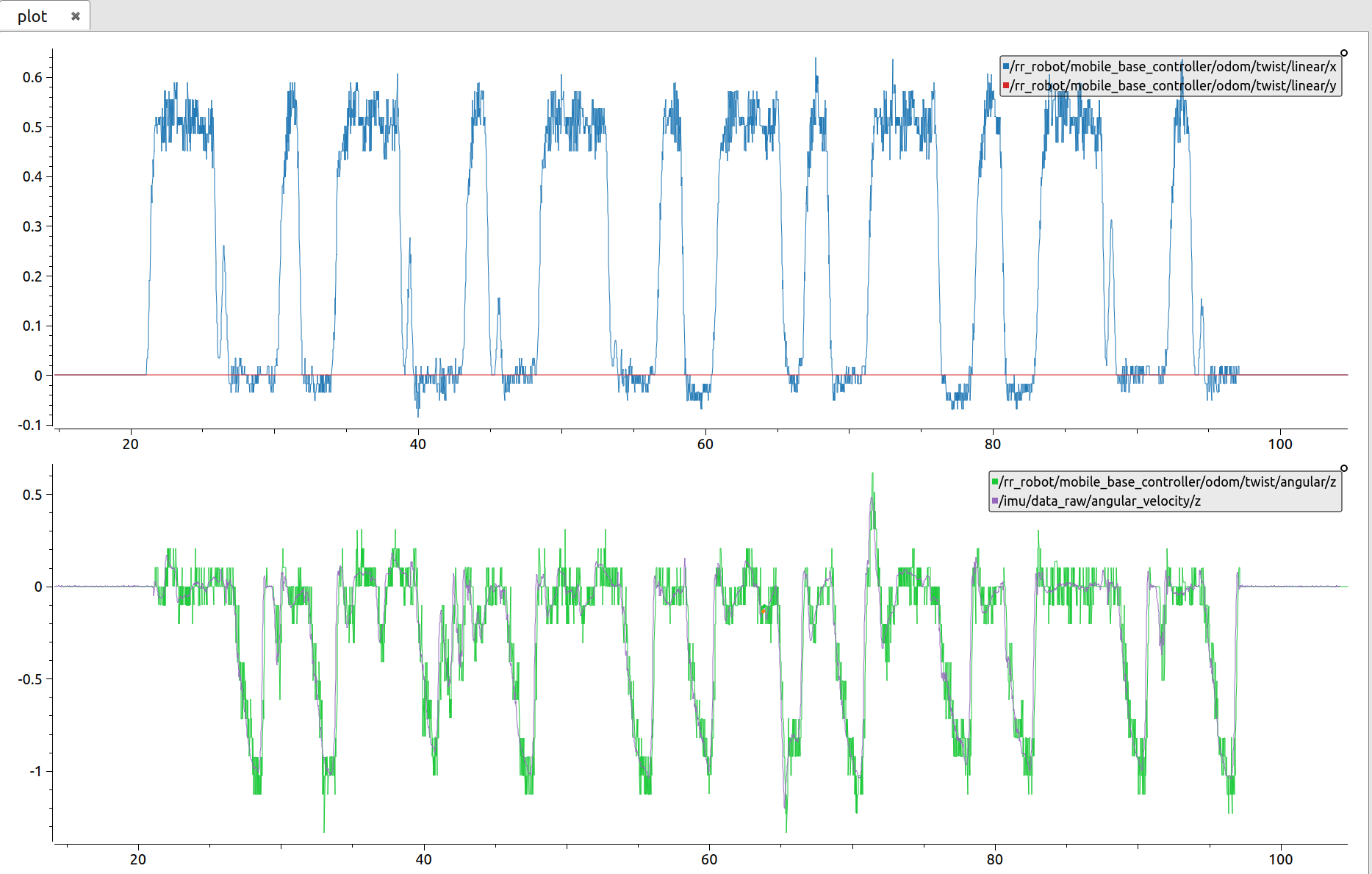

When I said that my platform used encoders I lied a bit - actually I only have data from the motor's hall sensors. This makes the data quite noisy, below you can see a graph with wheel odom velocity on x and y and yaw rates (from wheel odom (green) and imu (purple). Would this noise contribute to my issue?

EDIT4: Sample sensor input:

Wheel odometry(/rr_robot/mobile_base_controller/odom):

header:

seq: 2443

stamp:

secs: 1579080868

nsecs: 941759306

frame_id: "odom"

child_frame_id: "base_link"

pose:

pose:

position:

x: 2.38348355885

y: 0.0650385644131

z: 0.0

orientation:

x: 0.0

y: 0.0

z: -0.502680731125

w: 0.864472140994

covariance: [0 ...

Do any of the IMUs give you out the orientation? I'd use that over others. You may want to try putting one of the IMUs through a complementary filter and then the output orientation into R_L.

One of the IMUs gives out orientation, however, the environment I run the robot in contains huge metallic object near 3 of the corners I turn at. I'll edit the question to add the visualisation of this one. It's actually using Madgwick and should fuse magnetometer with other sensors.

Are you _sure_ all the sensors are being fused? I presume you are using a bag file to get repeatable results; how are you modifying the covariance values in your bag file? Did you define static transforms for the IMU data frame_ids? And are you plotting the right output topic? The fact that _nothing_ changes the output leads me to believe one of the inputs is being ignored. What happens if you turn off the IMU input?

So the filter is clearly trusting your IMU far more than your odom data. At this point, I would turn on debug mode (set the debug output file to an absolute path), let the robot go through a single turn, then quickly kill it. Zip that and post it here.

Edited the question and posted a google drive link to the zipped debug file.

BTW, you have

two_d_mode, but are fusing roll and pitch data. Those are going to get ignored.